Follow this 16-step SEO Audit checklist to find SEO errors and boost your Google rankings.

Google’s algorithm now has over 200 ranking factors, and they seem to be adding more every month.

Does this mean that an SEO Audit needs more than 200 steps to be any good? Does it need to take weeks and hundreds of man-hours?

No, it doesn’t.

Google weighs each factor differently, and by focusing on the most critical factors, you can get over 90% of the results with less than 10% of the effort.

Here's a list of what we will be covering in our SEO audit:

- Decide how SEO fits into your overall marketing strategy

- Crawl your website and fix technical errors

- Remove low-quality content

- Use robots.txt and robots meta tags to resolve technical issues

- Check your page speed and load times

- Test your mobile-friendliness

- Elevate your Core Web Vitals

- Get rid of structured data errors

- Test and rewrite your title tags and meta descriptions

- Analyze keywords and organic traffic

- Learn from your competition

- Improve content with an on-page SEO audit

- Analyze and optimize your existing internal links

- Improve your backlink strategy

- Reformat URLs and use 301 redirects

- Track your site audit results

If you’re looking for a simple approach to an SEO audit, check out the video below. If you’re just starting to learn about SEO, read our "What Is SEO?" guide so you can start earning more organic traffic for your website. You can follow along with everything we do using our downloadable SEO audit checklist.

SEO Audit Tools You Might Need

Before we jump into the SEO audit process, there are a few tools that you’ll want to experiment with to help make this entire process go as smoothly as possible. Are they required to overhaul your site from an SEO audit point of view? Not necessarily — but they will make the process far easier and more effective.

We created a separate, comprehensive guide to SEO audit tools so that we could explore pros, cons, and more detailed explanations of each one. Take time to review their strengths. Each tool brings a different capability to the table, covering factors like indexing, site speed performance, and link analysis.

With your tools assembled, we can move on to auditing your website and boosting your organic traffic.

#1 Decide How SEO Fits Into Your Overall Marketing Strategy

SEO/SEM is no longer the be-all and end-all of digital marketing. You can’t expect SEO to deliver 100% of your results when you might only spend 30% of your energy and money on it. For this reason, before you perform your SEO audit, decide what actions you want your SEO strategy to drive.

One simple question that will help you decide this is:

When do you want your content to reach your customers?

- Before they know they are interested in a product/service. (Top-of-funnel content)

- When they are researching/comparing alternatives. (Middle-of-funnel content)

- Right before the point of sale. (Bottom-of-funnel content)

Once you know what part of the funnel your SEO will support, you can find an SEO strategy that works for your company, and everything from here on out gets a whole lot easier. You’ll be able to evaluate your content’s performance in light of relevant metrics, understand where your content gaps are, and complete this audit in a targeted way that will get you real results.

If your answer to this question is “all three,” you need to start setting priorities. Ideally, each piece of content targets only one of these parts of the funnel. Do you have time to produce content fast enough to keep up with all three priorities? If not, pick the one that matters most to you and focus on it for now. Later, you can see whether content can take on a role in other parts of your funnel.

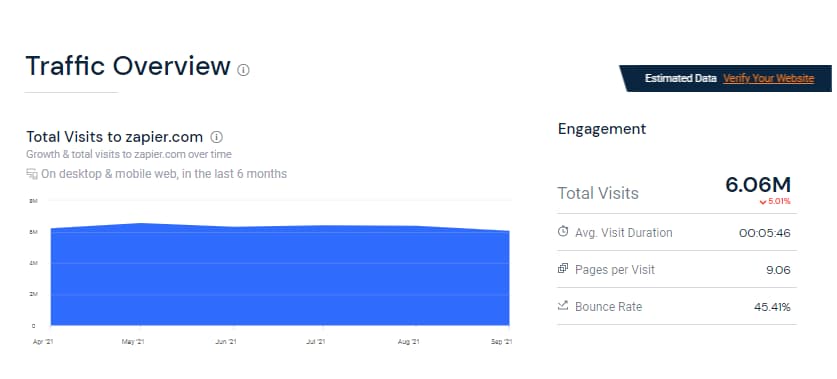

Zapier is a great example of a company that dedicates its SEO strategy to one specific step in the buyer’s cycle. It gets more than 6 million visits every month.

Zapier achieves this because it understands its SEO goal: to drive awareness and discovery through top-of-funnel (TOFU) content. Most of its 25,000 landing pages target people searching for integrations between two pieces of software, then show them how Zapier can connect the two.

SERPs like this are the bread and butter of Zapier, a business that reached $140 million in annual recurring revenue. Every time they partner with a new company, they make several integrations pages.

These pages are easier to rank because partners are encouraged to link to their unique integration page.

With the added cash flow, they eventually added a vibrant company blog and other elements to their SEO & content strategy, but this simple strategy generated millions of dollars.

If you know when you want to reach customers via organic search and create a strategy around that, you significantly increase your chances of success.

#2 Crawl Your Website and Fix Technical Errors

Even today, fundamental technical SEO issues are more common than you’d like to think, and one sure way to find these issues is by crawling your website.

Among the web pages analyzed in one study, 50% had duplicate content and indexation issues, 45% had broken image and alt tag issues, and 35% had broken links.

There is a wide range of free and paid tools you can use to crawl your site. We recommend using Screaming Frog’s SEO Spider to kick off your SEO audit (it’s free for the first 500 URLs and then £149/year after that).

Once you’ve downloaded and installed the SEO Spider, it’s time to select your crawl configuration. Configure your crawler to behave like the search engine you’re focused on (Googlebot, Bingbot, etc.). In Screaming Frog, you can select your user agent by clicking Configuration and then User-Agent (only available in the paid version).

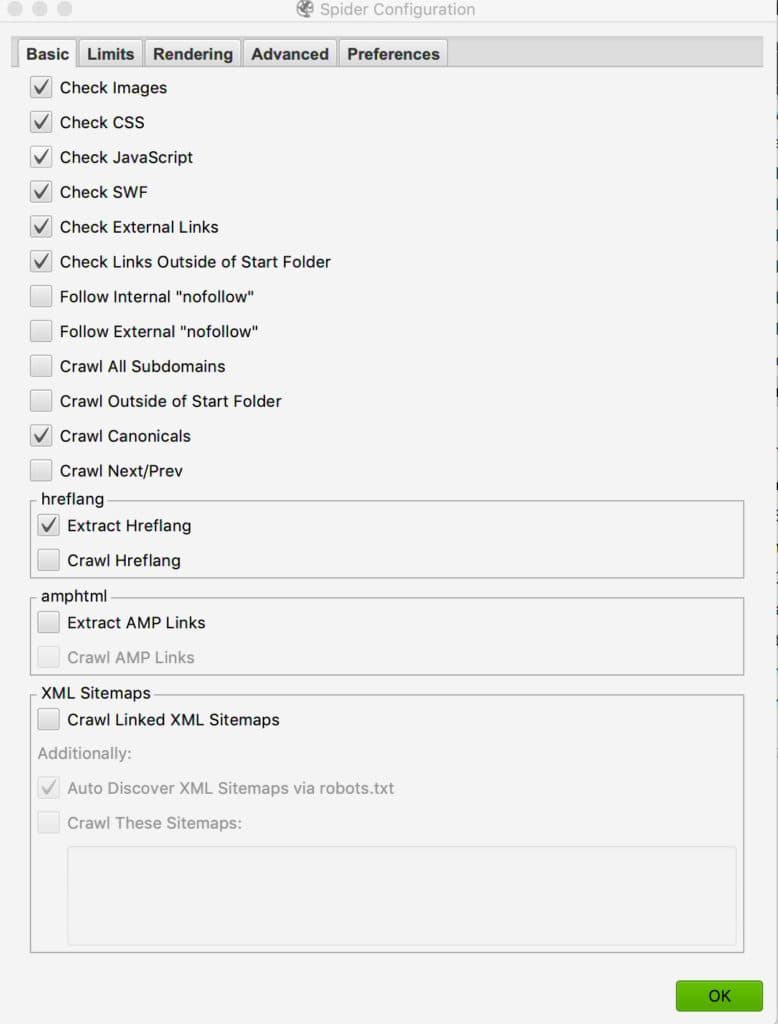

Next, you want to decide how the spider should behave. You can do this by selecting “spider” from the configuration menu in Screaming Frog. Then, you need to decide whether you want the spider to check images, CSS, JavaScript, Canonicals, or a host of other options. We suggest allowing the spider to access all of the above (we will share our setup below).

If you have a site that relies heavily on JavaScript (like SpyFu), you’ll want to make sure the spider can render your pages correctly. To do that, click the Rendering tab and select JavaScript from the drop-down. You can also enable rendered page screenshots to confirm that your pages render correctly.

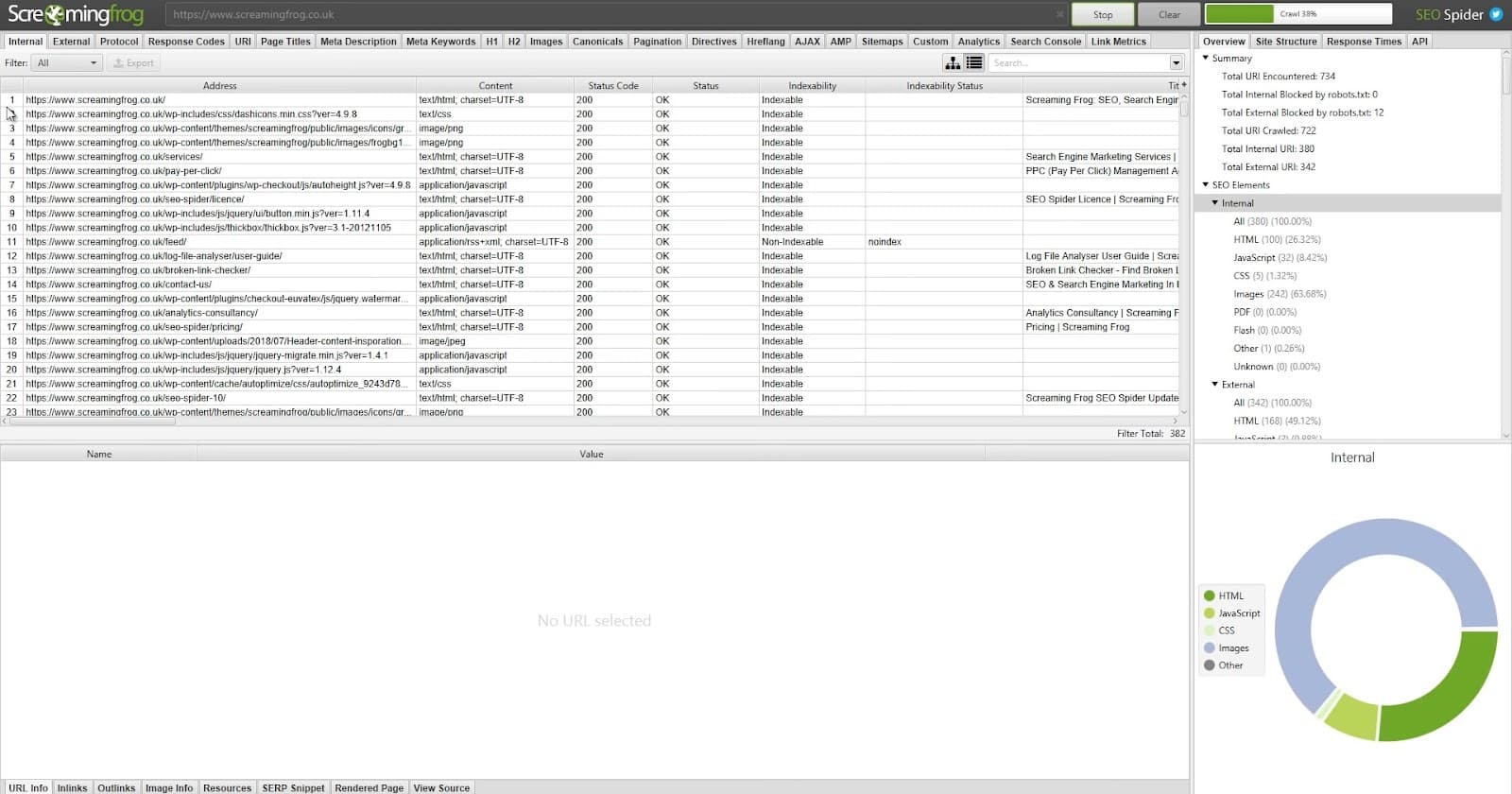

When everything is finalized, enter your URL and click “Start.” Depending on the size of your domain, this could take quite a while — so remember, patience is a virtue.

The result is a large table of the crawled webpages, with information about each one in the table’s columns.

Use this information to find problems you can fix for quick wins in your technical SEO audit, such as the duplicate content, indexation issues, broken images, alt tag issues, and broken links mentioned above. Because the crawler identifies the specific URL for each problem, you can fix them one by one.

How to Fix a Common Duplicate Content Issue in WordPress

Duplicate content is a common technical SEO issue, often caused by the content structure built into your CMS (Content Management System). For example, WordPress publishes category archive pages by default. If you also create your own separate category pages, Google might index both versions, leading to duplicate content in the SERPs.

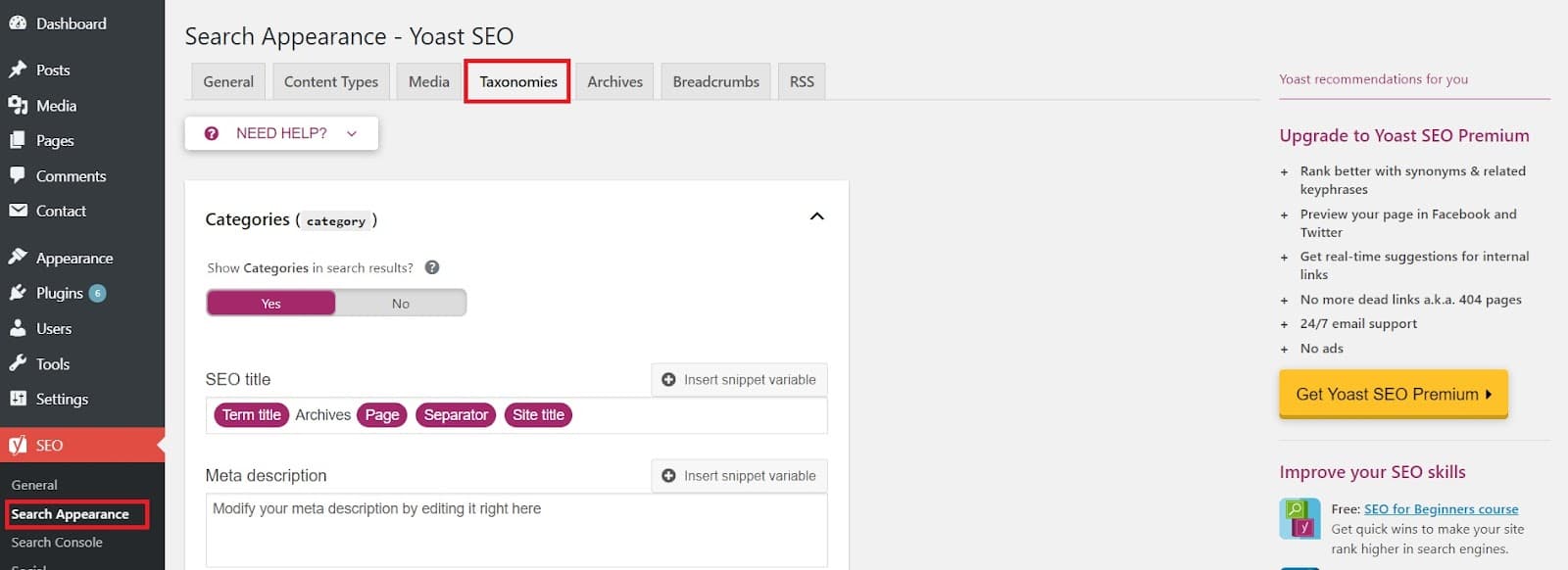

If you have the SEO by Yoast plugin installed, you can easily hide category and other taxonomy pages from the search results.

Go to SEO/Search Appearance/Taxonomies in the WordPress dashboard, and change the settings for categories and tags.

If your category pages are getting flagged for duplicate content, you should set the “Show Categories in search results” setting to “No.”

When you’re done fixing this issue and any others your crawler flagged, recrawl your site to make sure you’ve resolved them all. Then move on to the next step.

Duplicate Versions of Your Site

This is a common issue and, fortunately, an easy fix. We might tell someone that our site is found at spyfu.com, but our site is really at https://www.spyfu.com. In fact, any of these variations gets you to our page:

- spyfu.com

- www.spyfu.com

- http://spyfu.com

- http://www.spyfu.com

- https://spyfu.com

- https://www.spyfu.com

Google can treat these variations as separate sites. We’re not going to drop them, because users expect to type any of these variations and we want to get them to the site. The solution is to put a 301 redirect on every variation except one, so that they all point to the actual domain (for us, https://www.spyfu.com).
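
In practice, this rule lives in your web server, CDN, or host’s redirect settings. The logic is easy to get subtly wrong, though, so here is a minimal Python sketch of it. It assumes https://www.spyfu.com is the one canonical version. The function name `redirect_target` and the constants are hypothetical. The sketch only decides where each variant should be 301-redirected; it doesn’t send any redirects itself.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Assumption: https://www.spyfu.com is the single canonical version of the site.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "www.spyfu.com"
BARE_HOST = "spyfu.com"

VARIANTS = [
    "spyfu.com",
    "www.spyfu.com",
    "http://spyfu.com",
    "http://www.spyfu.com",
    "https://spyfu.com",
    "https://www.spyfu.com",
]


def redirect_target(url: str) -> Optional[str]:
    """Return where a 301 for `url` should point, or None if `url` is already canonical."""
    if "://" not in url:
        # No scheme typed: treat it as http for this sketch; it needs a redirect either way.
        url = "http://" + url
    parts = urlsplit(url)  # urlsplit lowercases the scheme
    host = (parts.hostname or "").lower()
    if host not in (CANONICAL_HOST, BARE_HOST):
        raise ValueError(f"{host!r} is not a version of this site")
    if parts.scheme == CANONICAL_SCHEME and host == CANONICAL_HOST:
        return None  # already canonical: serve the page, no redirect
    # Keep the path and query string so deep links land on the same page.
    return urlunsplit((CANONICAL_SCHEME, CANONICAL_HOST, parts.path or "/", parts.query, ""))


if __name__ == "__main__":
    for variant in VARIANTS:
        print(f"{variant:25} -> {redirect_target(variant) or 'serve page (canonical)'}")
```

Running it prints five variants that need a 301, plus the canonical one that should be served directly. The sketch keeps the path and query string so that old deep links land on the matching page rather than the homepage.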

#3 Remove Excess and Low-Quality Content

While it seems counterintuitive, Google has said it doesn’t treat posting frequency or content volume as a ranking factor.

(Source)

(Source)

And it’s not just smoke and mirrors. Over the last few years, sites have managed to improve their search rankings by actually removing thousands of pages from search results. This strategy, sometimes called Content Pruning, can lead to significant traffic increases as big as 44%.

Just as with regular pruning, Content Pruning is a process where you remove the unnecessary to maximize results.

Remove pages that serve little purpose and don’t adequately answer searchers’ queries.

You should consider removing the problematic pages your crawler found in the last step. However, tools often miss many problem pages, so you’ll also need to do some manual searching.

What better place to start than Google itself? Search Google for “site:(website URL)” to get an approximate list of the pages Google has indexed for your site. Check roughly how many pages are indexed. If the number is outrageous compared with the pages you actually want in search results, start pruning.

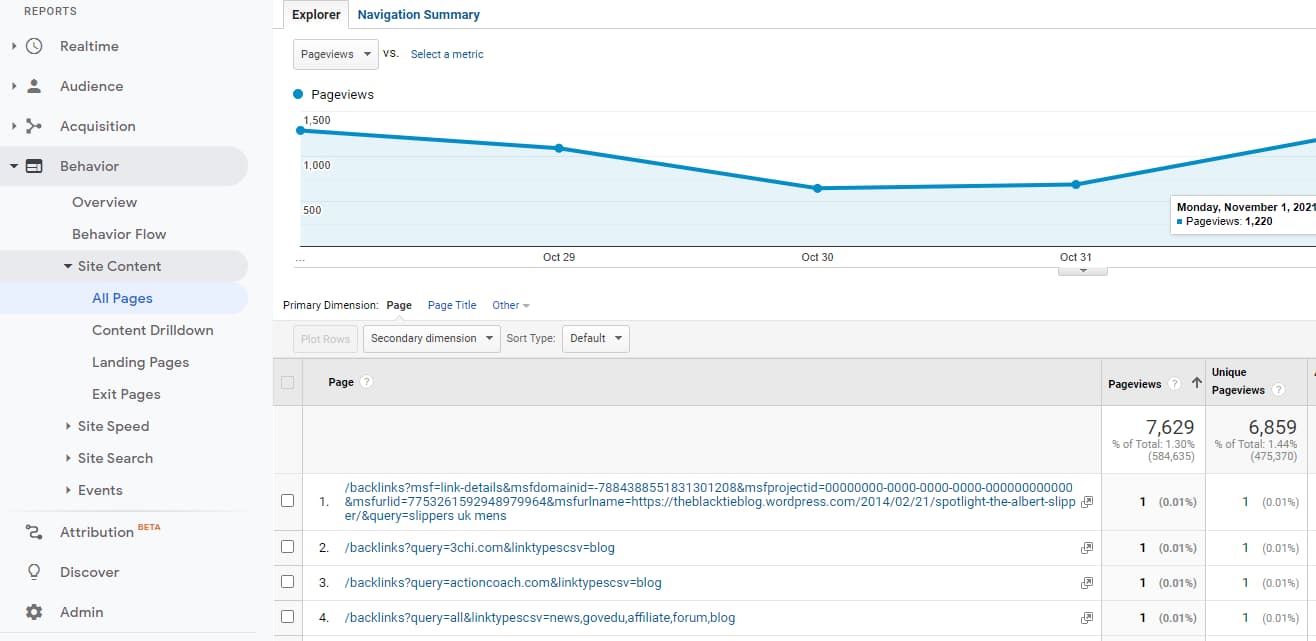

If you don’t know where to start, use Google Analytics to find the pages that get little or no traffic. In Universal Analytics, sign in and select Behavior/Site Content/All Pages. In GA4, the equivalent report is under Reports/Engagement/Pages and screens. This screen shows every page that received traffic and how much traffic it got in the selected time frame (which you can adjust in the top-right corner).

Any underperforming pages should be placed on a list to be refreshed, no-indexed, or removed.
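
If you export page-level traffic to CSV, you can script this list. The sketch below assumes a hypothetical export with `page` and `views` columns and an arbitrary cutoff of 10 views; adjust both to match your own analytics export and site size. It also folds in the URL list from your crawl, because pages with zero visits often don’t appear in an analytics export at all.

```python
import csv
import io

# Hypothetical export with "page" and "views" columns; rename to match your analytics export.
SAMPLE_EXPORT = """page,views
/blog/seo-audit,5400
/blog/old-announcement,3
/tag/misc,0
/pricing,1200
"""


def low_traffic_pages(csv_text, crawled_urls=(), min_views=10):
    """Return (page, views) pairs with fewer than `min_views` views, lowest first."""
    views = {row["page"]: int(row["views"]) for row in csv.DictReader(io.StringIO(csv_text))}
    # Pages with zero traffic often never appear in an analytics export,
    # so anything the crawler found that is missing from the export counts as 0.
    for url in crawled_urls:
        views.setdefault(url, 0)
    flagged = [(page, n) for page, n in views.items() if n < min_views]
    return sorted(flagged, key=lambda item: (item[1], item[0]))


if __name__ == "__main__":
    for page, n in low_traffic_pages(SAMPLE_EXPORT, crawled_urls=["/pricing", "/tag/empty"]):
        print(f"{n:>6}  {page}  -> refresh, noindex, or remove?")
```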

If you are using a CMS, also note which types of pages are unnecessarily indexed.

There might be a setting in the backend that you can change to fix the issues, rather than manually deleting or no-indexing hundreds or thousands of pages.

#4 Robots.txt and Robots Meta Tags

Robots.txt files tell search engines like Google which sections of your site you want them to crawl and which ones you DON’T want them to crawl. Note that robots.txt controls crawling, not indexing: a blocked page can still be indexed if other pages link to it.

Since your robots.txt file is so important when it comes to indexing your site, any accidents here can cause compounding SEO problems. Once, we accidentally set a bug in motion that basically told Google not to crawl our main site. This bug, which was the programming equivalent of turning off the wrong light switch, told Google to de-index hundreds of thousands of pieces of content pretty much overnight.

This lasted for five days without us realizing it. We found the problem and fixed it, but the damage was done. We went from 500,000 pages indexed to 100,000. We got some of that traffic back. But not all of it, and it was a crushing blow and an important lesson.

To avoid this, use a service like TechnicalSEO’s text checker, which monitors your robots.txt file and notifies you if it detects any changes.
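
You can also run a quick check of your own whenever robots.txt changes. This sketch uses Python’s standard `urllib.robotparser` to compare an old and a new robots.txt and report any important URLs the new file would newly block. The names `blocked_urls` and `newly_blocked` and the example.com URLs are hypothetical. Treat the script as a smoke test rather than an exact Googlebot emulator: Python’s parser applies rules in file order and doesn’t support Google’s `*` and `$` path wildcards.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, agent="Googlebot"):
    """Return the URLs that `agent` may not crawl under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


def newly_blocked(old_txt, new_txt, key_urls, agent="Googlebot"):
    """Key URLs that were crawlable before the change but are blocked after it."""
    before = set(blocked_urls(old_txt, key_urls, agent))
    return [url for url in blocked_urls(new_txt, key_urls, agent) if url not in before]


OLD = "User-agent: *\nDisallow: /admin/\n"
NEW = "User-agent: *\nDisallow: /\n"  # a one-line slip like this blocks the entire site
KEY_URLS = [
    "https://www.example.com/",
    "https://www.example.com/blog/seo-audit",
    "https://www.example.com/admin/login",
]

if __name__ == "__main__":
    print(newly_blocked(OLD, NEW, KEY_URLS))
```

In this example, the new file replaces one narrow rule with `Disallow: /`. The homepage and the blog post therefore show up as newly blocked, which is exactly the kind of change you want an alert for.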

If you want to de-index specific pages, Google recommends using robots meta tags which “lets you utilize a granular, page-specific approach to controlling how an individual page should be indexed and served to users in search results.”

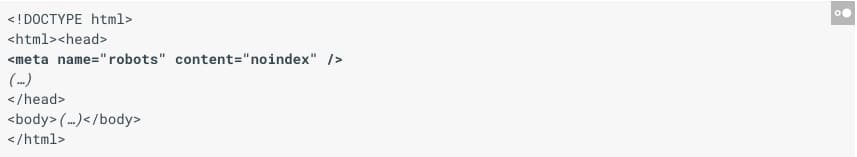

All you need to do is add the robots meta tag, `<meta name="robots" content="noindex">`, to the `<head>` section of your page.

By adding this meta tag, you’re telling most search engines not to show this page in their search results.
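
To confirm the tag is actually live on a page, you can parse the page’s HTML. This minimal sketch uses Python’s built-in `html.parser` and checks both `robots` and `googlebot` meta tags; the class and function names are hypothetical. It doesn’t look at the `X-Robots-Tag` HTTP header, which can also carry `noindex`. Also keep in mind that Google can only see a `noindex` tag on pages it is allowed to crawl, so don’t block those same pages in robots.txt.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        attr = {name: value or "" for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindexed(html):
    """True if the page's robots meta tags ask search engines not to index it."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return bool({"noindex", "none"} & finder.directives)
```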

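You may also see people recommend a Noindex: line in the robots.txt file. Google never officially supported it, and although it worked for us and others in the past, Google stopped honoring it entirely on September 1, 2019. Use the robots meta tag (or an X-Robots-Tag HTTP header) instead.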

#5 Check Your Page Speed and Load Times

One of the best moves for your SEO is to optimize your pages’ load times, considering Google now lists page speed as an official ranking factor. An easy way to check your website loading speed is to use a tool like Google PageSpeed Insights.

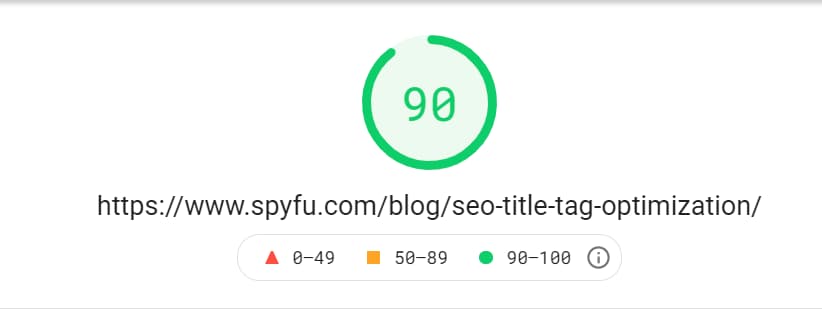

Simply type in the URL and run the test.

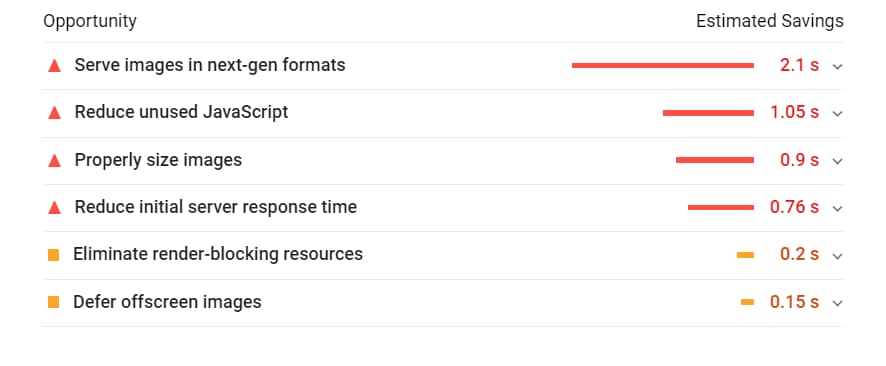

As you can see, PageSpeed Insights is giving us a pretty good grade, 90 out of 100. But that doesn’t mean there isn’t room for improvement (there always is). Scroll down, and Google will tell you what you can do to make your load time even faster.

Fixing the issues Google finds can be as simple as installing a caching plugin (for clunky WordPress installations), downsizing video and image files, or adding lazy loading. It can also be as complicated and expensive as moving servers. So pick your battles: focus on easy wins on your most important pages to get the maximum impact for the least time invested.
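
To track scores for many pages instead of pasting URLs in one at a time, you can use the PageSpeed Insights API (v5). The sketch below builds a request and pulls the Lighthouse performance score out of the JSON response. The helper names are hypothetical. `fetch_score` needs network access; Google allows limited use without an API key and a higher quota with one.

```python
import json
from typing import Optional
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile", api_key: Optional[str] = None) -> str:
    """Build a PageSpeed Insights v5 API request URL for one page."""
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


def performance_score(psi_response: dict) -> int:
    """Turn Lighthouse's 0-1 performance score into the 0-100 grade the web report shows."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def fetch_score(page_url: str, strategy: str = "mobile", api_key: Optional[str] = None) -> int:
    """Run a live test (requires network access)."""
    with urlopen(psi_request_url(page_url, strategy, api_key), timeout=120) as resp:
        return performance_score(json.load(resp))
```

For example, `fetch_score("https://www.example.com/", strategy="desktop")` returns a number on the same 0 to 100 scale as the web report. Running it periodically over your top landing pages shows whether your fixes are holding.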

#6 Test Your Mobile-Friendliness

With more than half of searches happening on mobile, fixing mobile-friendliness issues should be one of your top priorities, especially if they affect any of your top pages. Mobile-friendliness is one of the main Page Experience signals Google considers when ranking websites. If your website is not mobile-friendly, Google may demote it in search results for users searching on mobile devices.

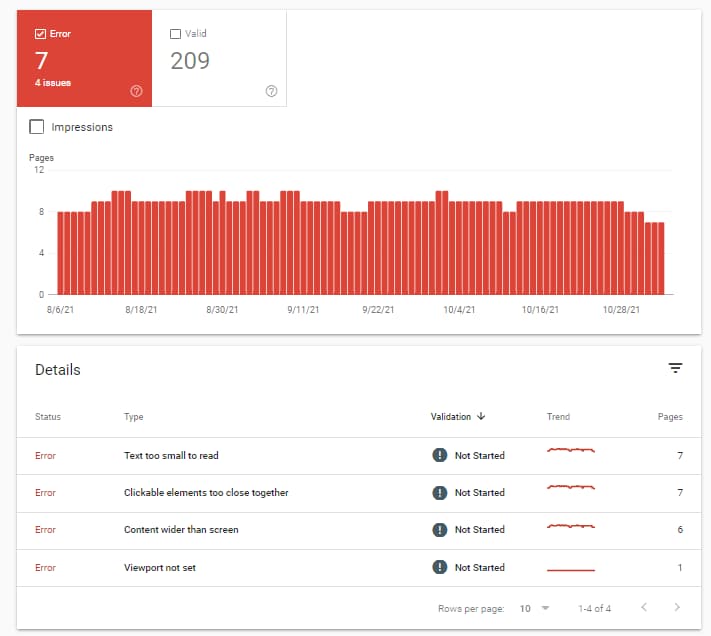

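To check whether your website is mobile-friendly and has any issues, you can use the Mobile Usability report in Google Search Console. Google retired this report, along with its standalone Mobile-Friendly Test, in December 2023. If the report no longer appears in your account, a Lighthouse audit in Chrome DevTools can still flag some of the same problems, such as a missing viewport tag.

To access the report, go to the left menu and click the "Experience" section. Then select "Mobile Usability" to see an overview of how well optimized your website is and how it has performed over time. The report also identifies specific issues that affect your website's mobile usability, like text that's too small to read or clickable elements placed too close together.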

From here, Google lays out which pages of yours have mobile-friendliness problems. Click through to see what the problem is, how to fix it, and then to validate the fix so Google can check to make sure that your site has improved.

If you are using an old theme in an old installation of WordPress, update your theme to a newer version. This should adjust your content for mobile viewers. If you don’t have the expertise to fix the issue, contact a developer to help you sort this out.

When you’re done fixing these errors, validate them yourself. Look up your top landing pages on Google and see if they’re loading well on your phone, tablet, or other mobile devices. This might also help you see if there are any optimizations you can make to improve your conversion rates on these landing pages.
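
One mobile issue you can check for yourself is the viewport meta tag. Without `<meta name="viewport" content="width=device-width, initial-scale=1">`, mobile browsers render the page at desktop width and shrink it to fit. "Viewport not set" was one of the issues Google’s Mobile Usability report flagged. This minimal sketch, with hypothetical names, checks a page’s HTML for that tag using only Python’s standard library.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attr = {name: value or "" for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() == "viewport" and self.content is None:
            self.content = attr.get("content", "")


def viewport_problem(html: str) -> Optional[str]:
    """Describe a viewport problem, or return None if the tag looks responsive."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return "no viewport meta tag"
    if "width=device-width" not in finder.content.replace(" ", "").lower():
        return "viewport does not use width=device-width"
    return None
```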

#7 Elevate Your Core Web Vitals

Google intends to make good user experience a major factor in its search results. The Core Web Vitals report in Google Search Console helps website owners find and resolve any issues that keep them from delivering positive UX (user experiences).

Core Web Vitals are a set of three performance metrics introduced by Google in 2020 (and updated in 2024), designed to measure the page speed and user experience of websites. These metrics include Largest Contentful Paint (LCP), Cumulative Layout Shift (CLS), and Interaction to Next Paint (INP).

LCP measures how long it takes for the largest image or text block in the viewport to render. INP measures the delay between a user's interaction with the page (a click, tap, or key press) and the browser's next visual response.

CLS measures the visual stability of the web page while it loads. If you've ever seen a button jump away just as you try to click it, that's what CLS measures.
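
Google rates each metric at the 75th percentile of real-user page loads, using published thresholds:

- LCP is good at 2.5 seconds or less and poor above 4 seconds.
- INP is good at 200 milliseconds or less and poor above 500 milliseconds.
- CLS is good at 0.1 or less and poor above 0.25.

The sketch below applies those thresholds to your own field samples. The nearest-rank percentile is a simplifying assumption, and Google’s reports may compute percentiles slightly differently.

```python
import math

# Google's published thresholds per metric: (good if <= this, poor if > this).
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def percentile_75(samples):
    """Nearest-rank 75th percentile of field measurements."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric, samples):
    """Classify a metric as good / needs improvement / poor at the 75th percentile."""
    value = percentile_75(samples)
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value > poor:
        return "poor"
    return "needs improvement"
```

For example, `rate("INP", [150, 300, 320, 800])` evaluates the 75th-percentile sample (320 ms), which falls between the two INP thresholds.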

This report is divided into desktop and mobile, and it showcases any issues that might be negatively affecting your website's performance. The report lists all the affected pages that have severe issues, flagging the issues that need urgent attention.

The feature also differentiates between pages that have minor issues and those that have severe ones.

Core Web Vitals provide website developers with a framework to identify and improve key aspects of their website performance that impact user experience. The better the user experience, the higher the likelihood of users staying on a website longer, engaging with its content, and ultimately converting.

Since Core Web Vitals are now a ranking factor for websites, it's crucial to consider these metrics when conducting an SEO website audit. By analyzing and improving on the elements measured in CWV, website managers can improve their website's rankings and visibility on search engine results pages, ultimately driving more traffic and increasing overall business revenue.

#8 Get Rid of Structured Data Errors

There is a wide range of pages on your domain that could benefit from including structured data. These include but are certainly not limited to things like product or service reviews, product or service information or description pages, pages that outline an upcoming event that you’re going to be taking part in, and more.

If you have no idea what we’re talking about, we've got you covered. Head over to our beginner’s guide to schema markup to learn more.

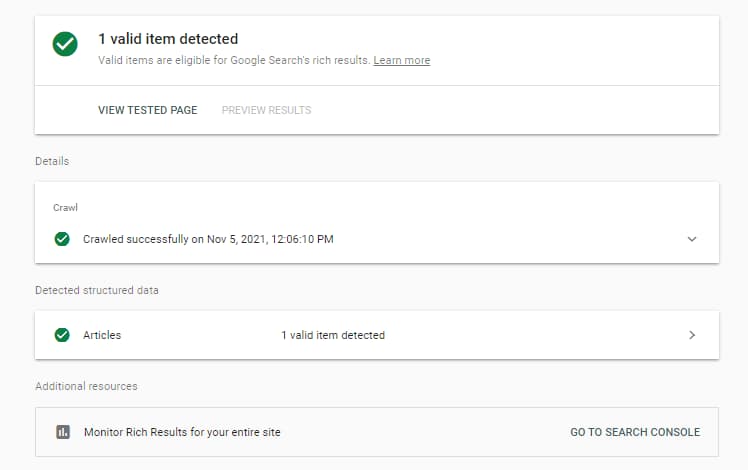

If you do, you’ll want to audit your current website for any structured data errors that might be holding it back. Head over to Google’s Rich Results Test (which replaced the old Structured Data Testing Tool) and enter the URL of a page you want to check. Run the test, and Google will both evaluate the structured data on that page and list any errors it found.

If the test uncovered any errors, fix them to finish your technical SEO audit. Luckily, Google’s tool tells you where they are. If you built your site yourself, dive back into the code and make the necessary changes. If you hired someone to build it, hand them the report and let them get to work. The report is a good starting point, and the impact can be significant.
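
Before or between runs of Google’s tool, a quick script can catch the most basic structured data errors across many pages. This sketch pulls every JSON-LD block out of a page using Python’s standard library. It reports JSON syntax errors and items missing `@context` or `@type`. The names are hypothetical, and the script does not check the properties Google requires for rich results, so it doesn’t replace Google’s test.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data  # script text may arrive in several chunks


def jsonld_errors(html):
    """Report JSON syntax errors and top-level items missing @context or @type."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    errors = []
    for i, raw in enumerate(extractor.blocks, start=1):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            errors.append(f"block {i}: invalid JSON ({exc.msg})")
            continue
        items = data if isinstance(data, list) else [data]
        for item in items:
            for key in ("@context", "@type"):
                if not isinstance(item, dict) or key not in item:
                    errors.append(f"block {i}: missing {key}")
    return errors
```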

#9 Test and Rewrite Your Title Tags and Meta Descriptions

Title tags and meta descriptions are the information Google typically displays for your pages on its SERP. Make sure they are unique and intriguing to help increase your pages’ CTRs and organic traffic.

Testing and rewriting your title tags and meta descriptions is an easy SEO win because it’s quick to implement and requires no technical SEO knowledge. First, check that no two pages share the same title tag or meta description. You should have done this in step two; if not, go back and do it now. You can find the instructions on how to find duplicate title and meta tags here.

Next, we want to punch up your top pages’ title tags and meta descriptions to make it more likely that people opt to click through to your page from the SERP. Remember that meta description information is designed to give people a very clear idea of the content you’re offering, thus encouraging them to click. Keep those descriptions short, sweet, and to the point — and also make sure they’re unique as well.

Some basic recommendations are:

- Title tags and meta descriptions should stand out on the SERP and describe what the page is about.

- Your brand name should be included in your title tag to improve click-through rates.

- Keep title tags to about 55–60 characters and meta descriptions to about 130–150 characters.
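
If your crawler can export each URL’s title and meta description, you can check for duplicates and length problems in bulk. This sketch assumes a hypothetical list of `(url, title, description)` tuples. It uses the upper ends of the ranges above as limits. Search results truncate snippets by display width rather than by a fixed character count, so treat these numbers as rough guides.

```python
from collections import defaultdict

MAX_TITLE = 60         # upper end of "about 55-60 characters"
MAX_DESCRIPTION = 150  # upper end of "about 130-150 characters"


def audit_meta(pages):
    """pages: iterable of (url, title, description) tuples, e.g. from a crawl export."""
    issues = []
    seen = {"title": defaultdict(list), "description": defaultdict(list)}
    for url, title, description in pages:
        checks = (("title", title, MAX_TITLE), ("description", description, MAX_DESCRIPTION))
        for field, text, limit in checks:
            text = (text or "").strip()
            if not text:
                issues.append((url, f"missing {field}"))
                continue
            if len(text) > limit:
                issues.append((url, f"{field} is {len(text)} chars (> {limit})"))
            seen[field][text.lower()].append(url)  # group case-insensitively to spot duplicates
    for field, groups in seen.items():
        for urls in groups.values():
            if len(urls) > 1:
                issues.append((", ".join(urls), f"duplicate {field}"))
    return issues
```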

Learn more about creating stellar title tags and compelling meta descriptions in our in-depth guide to meta tags.

Even when you think you’ve crafted the best meta tags for your pages, the work doesn’t stop there. Set a reminder to come back in two months and see how your changes have affected your CTR. Keep adjusting and testing your top pages to bring even more organic traffic to your site.
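
When you come back to measure, compare click-through rate (clicks divided by impressions) rather than raw clicks, since impressions can rise or fall for reasons unrelated to your snippet. Here is a minimal sketch. It assumes you’ve pulled clicks and impressions for the page from Search Console’s Performance report for two equal-length periods; the function names are hypothetical.

```python
def ctr(clicks, impressions):
    """Click-through rate as a fraction; 0.0 when there were no impressions."""
    return clicks / impressions if impressions else 0.0


def ctr_change_points(before, after):
    """Change in CTR, in percentage points, between two (clicks, impressions) periods."""
    return round((ctr(*after) - ctr(*before)) * 100, 2)
```

For example, `ctr_change_points((120, 4000), (210, 4200))` compares a CTR of 3% (120 / 4,000) with 5% (210 / 4,200), a change of 2 percentage points. Seasonality and ranking changes can still move CTR, so look at several pages before drawing conclusions.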

#10 Analyze Keywords and Organic Traffic

Understanding which keywords bring in the majority of your organic traffic can help you in two ways: you get a shortlist of your most important pages where you can focus your on-page SEO audit, and you can understand what kinds of keywords work for your audience so you can find similar keywords that can bring you even more traffic.

Your top keywords might not be the ones you expect. Most websites get the majority of their traffic from minor keywords. Long-tail searches account for 91.8% of all search queries on the web, and over 50% of searches are three words or longer. This means you probably get most of your traffic from lower-volume, long-tail keywords.

The first step to making the most of this trend is to do an in-depth analysis of the keywords that already drive traffic to your site.

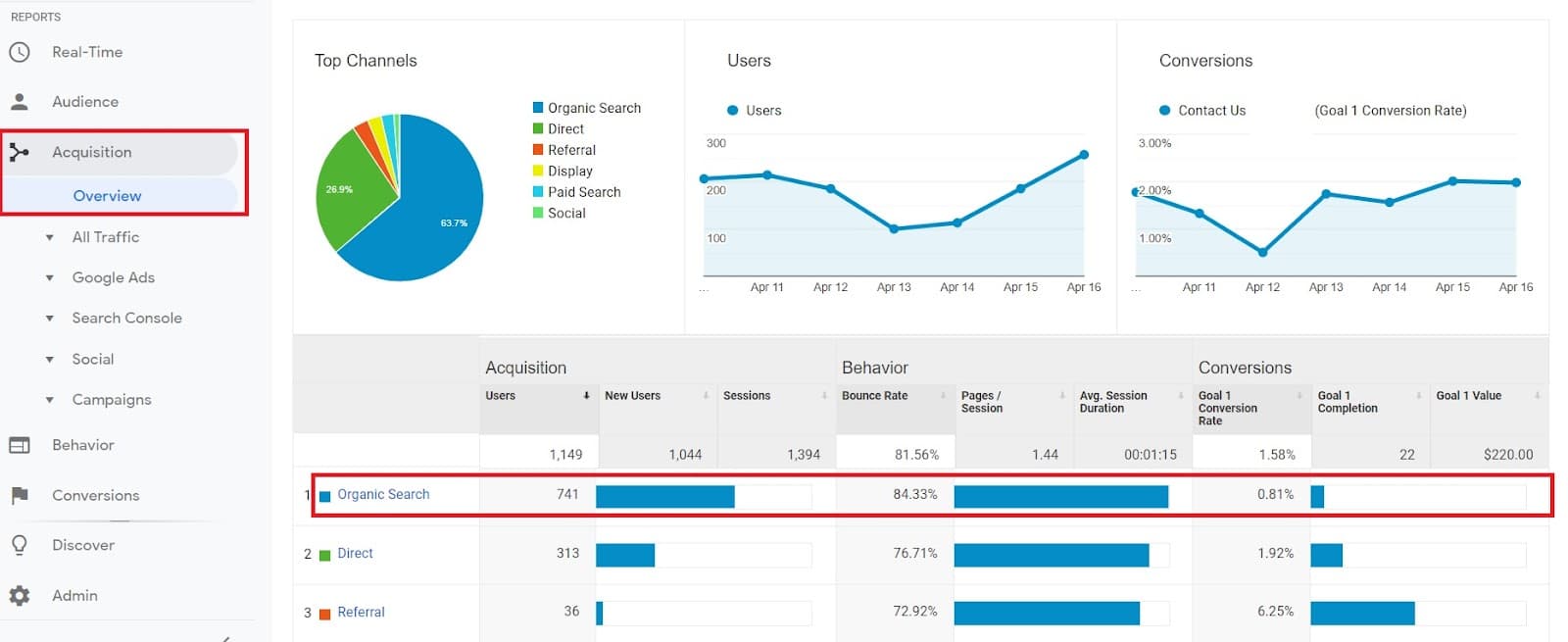

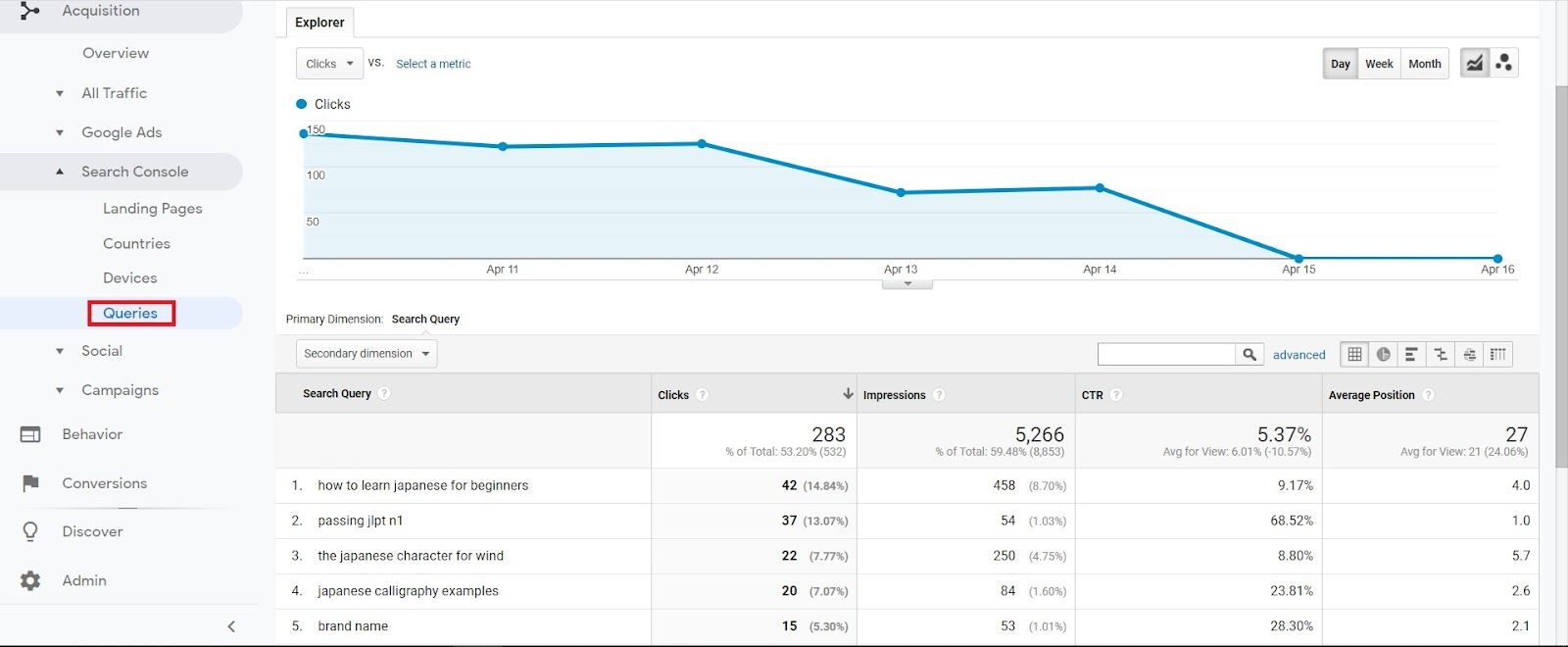

If you have connected Google Analytics with Search Console, you can analyze your keywords from within GA. Simply open up the Acquisition breakdown, and click through to organic search.

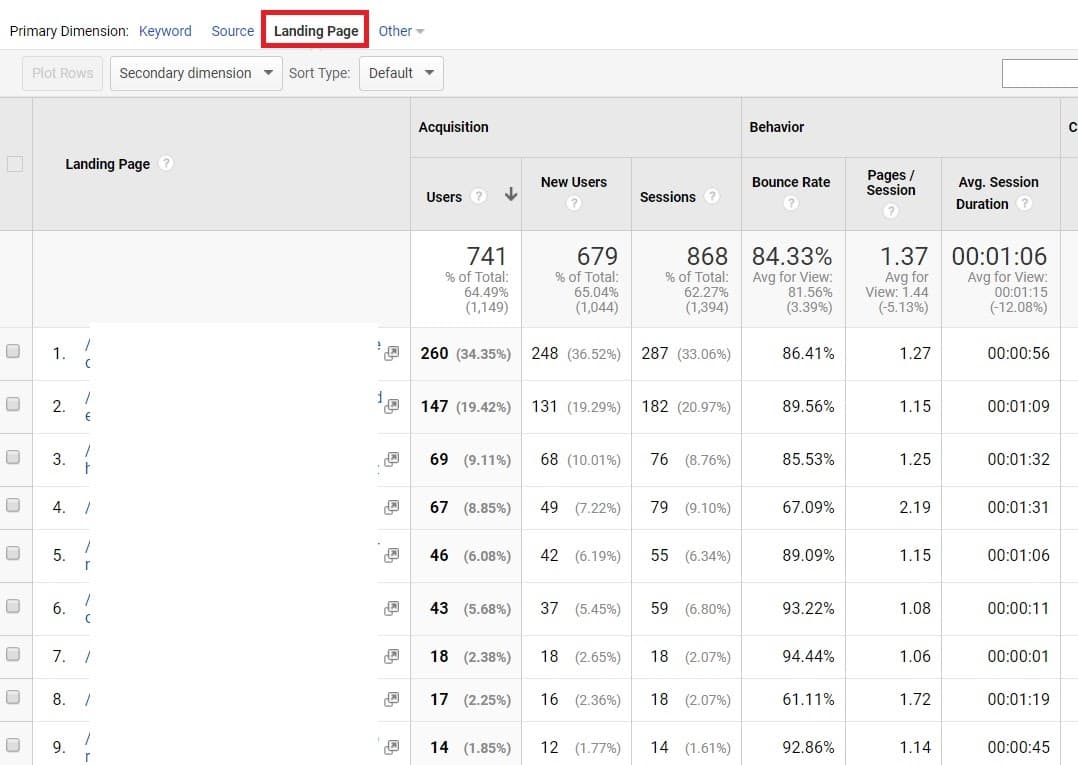

Once you’ve arrived at the channel breakdown for Organic Search, select “Landing Page” as the primary dimension. (The Keyword view almost always shows “not provided” now and is not very useful.)

This will give you an overview of the most important pages on your site for organic search. Then you can cross-reference these results with the queries breakdown in Search Console.

Since GA no longer displays the keywords searchers used on Google, this roundabout approach is one of your best options. In Search Console’s Performance report, you can also click a specific page to see the queries it ranks for.
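
Once you have both exports, joining them takes only a few lines. This sketch assumes hypothetical row formats: analytics rows with `page` and `sessions`, and Search Console rows with `page`, `query`, and `clicks`. Search Console reports full URLs while analytics usually reports paths, so the sketch compares pages by path.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def _path(url):
    """Reduce a full URL or a bare path to its path component."""
    return urlsplit(url).path or "/"


def queries_by_landing_page(ga_rows, gsc_rows, top_n=3):
    """Pair each organic landing page (most sessions first) with its top queries by clicks."""
    queries = defaultdict(list)
    for row in gsc_rows:
        queries[_path(row["page"])].append((row["clicks"], row["query"]))
    report = []
    for row in sorted(ga_rows, key=lambda r: r["sessions"], reverse=True):
        top = sorted(queries.get(_path(row["page"]), []), reverse=True)[:top_n]
        report.append((row["page"], row["sessions"], [query for _, query in top]))
    return report
```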

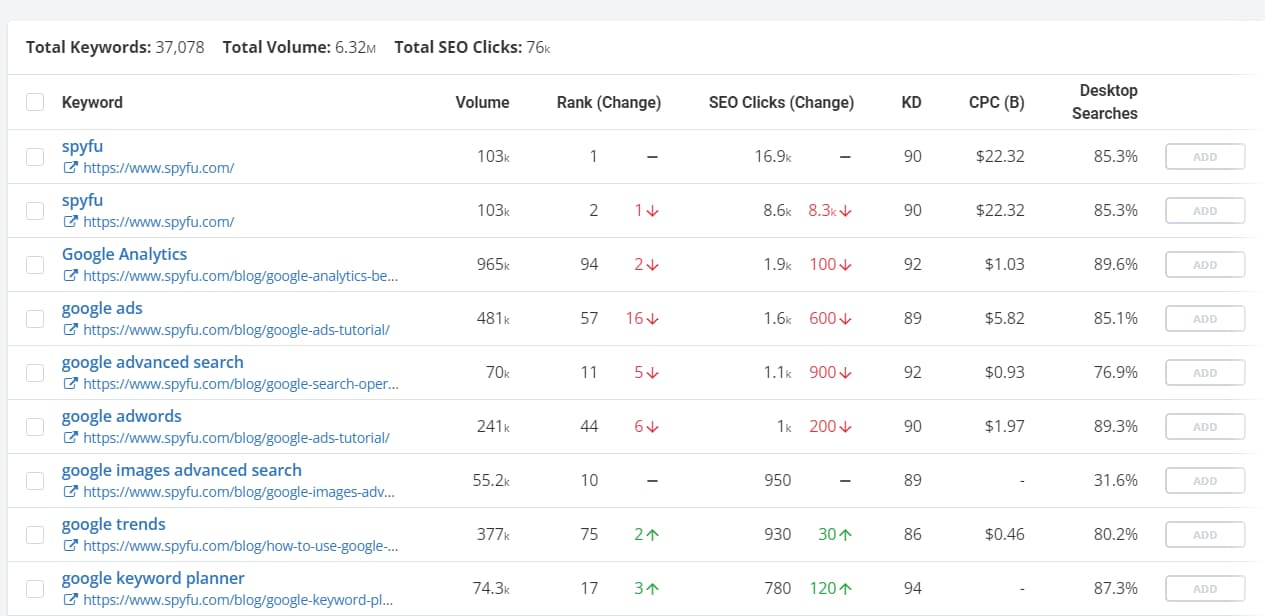

Keyword tools also offer a growing number of options. We suggest SpyFu for its advanced metrics and filtering capabilities.

Just type in your URL, and you will instantly get a breakdown of your most valuable SEO keywords. The built-in sort shows the most valuable keywords first: the ones that bring you the most clicks at your current ranking position. The only caveat is that the traffic figures are estimates, whereas your GA traffic is based on real data. However, SpyFu supplies insights that Google does not.

By keeping track of these high-value keywords — and saving them in the SpyFu project manager — you get regular performance updates on your most important phrases.

You can also learn from your top keywords and see if there are other areas you could move into that could bring you even more relevant search traffic. Ask yourself:

- What keywords are bringing in the most traffic?

- Which landing pages (and the keywords associated) are leading to the highest number of conversions?

- How do my current top keywords help me achieve my SEO goal?

After that, use SpyFu’s Related Keywords tool to find new keywords that old pages could be re-optimized for or that could become the primary keyword in their own piece of new content. Since you now know what has worked in the past (your current top keywords) and your SEO goals, spotting keywords that share characteristics with the first and dovetail with the second could be your next big boost in SEO traffic.

Learn more about finding valuable keywords in our guide to keyword research and finding your most profitable keywords.

#11 Learn From Your Competition

Spying on your competition is the simplest way to find keyword opportunities among the billions of search terms out there. The top one billion search terms make up only 35.7% of total searches. With the other 99 billion+ terms making up the bulk of searches, there are too many to find through normal keyword research and ideation.

So how do you know which keywords to target, day after day, year after year? “Borrow” a little from every one of your biggest competitors.

If you are new to SEO competitor analysis, don’t worry. With SpyFu, it’s no longer complicated.

Type in your URL, press enter, and voila, your organic search competitors are served on a silver platter. Or rather, they are arranged in the form of a numbered list.

Once you have this list of competitors, you can do a few things.

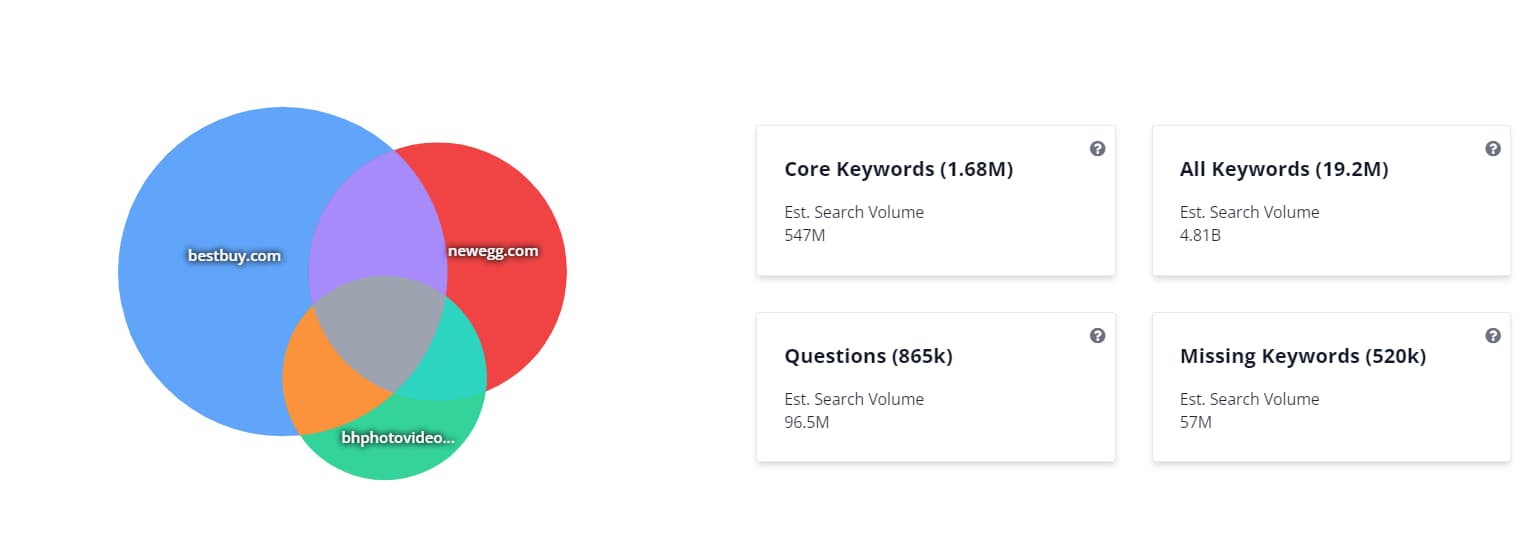

You can painstakingly go through the SERPs for important keywords and try to figure out where you’re lacking. Or you can use SpyFu’s Kombat to instantly find keyword opportunities that your competitors use.

The Kombat breakdown compiles a list of all the keywords targeted by three separate domains (yours being one of them). Then it filters the keywords into three lists:

- Keywords everyone targets.

- Keywords your competitors are targeting, but not you.

- Keywords one competitor is targeting.

The second list is the most valuable, and it often contains gems you can build a content calendar around.
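
Conceptually, those lists are set operations on each site’s keywords. The sketch below approximates them with Python sets; SpyFu’s exact definitions for each Kombat list may differ. Here, “competitors, but not you” is interpreted as keywords both competitors share that you don’t target, and the function name is hypothetical.

```python
def kombat_style_lists(yours, comp_a, comp_b):
    """Approximate the three keyword lists with set operations (an assumption, not SpyFu's code)."""
    yours, comp_a, comp_b = set(yours), set(comp_a), set(comp_b)
    return {
        "everyone": yours & comp_a & comp_b,
        "competitors_not_you": (comp_a & comp_b) - yours,   # the high-value gap list
        "one_competitor_only": (comp_a ^ comp_b) - yours,
    }
```

The `competitors_not_you` set corresponds to the second list described above.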

Then, if you notice a single website is killing the competition in organic search, you can use our Keyword Research Tool to find opportunities from just that one site.

#12 Improve Content With an On-Page SEO Audit

Google remains consistent in its message to SEOs: create clear, authoritative content that people want to read.

On-page changes can deliver real results with much less investment. In a recent case study, on-page improvements alone led to a 32% increase in organic traffic. Breaking up content and adding relevant H2s/H3s/H4s alone led to a 14% improvement. Whether it’s natural language optimization or fresher phrasing, your content benefits from modern updates. And ultimately, so does your SEO.

A great tool that can help you improve your content is MarketMuse. It will help you strike a nice balance between SEO concerns and the actual content.

It analyzes all of the pages on your website and pinpoints areas where the content may need some tweaks.

(Source)

You can also actively analyze single pages against their competition.

(Source)

It automatically suggests topics that you should include in pages and posts to get a fighting chance in the SERPs.

If you aren’t interested in using a tool to improve your content, then there is a lot you can do on your own. Read through your web pages and make sure that all of your content:

- Is written for humans and broken into manageable paragraphs.

- Contains valuable information that readers will want to consume.

- Doesn’t have too many ads.

- Is optimized to encourage conversions and a good user experience.

- Is formatted correctly with good use of header tags, alt texts, and images.

- Has a gripping page title.

- Is free from spelling errors or grammatical mistakes.

For more advice on getting your on-page SEO audit correct, check out our full on-page SEO checklist. Start with important pages like your home page and top organic pages, and then move down your list until your whole website is optimized.

For pure readability concerns, you can run your content through an app like Grammarly or Hemingway before publishing.

This AI-powered proofreading will help you pick up on grammatical errors and make your content easier to read for the humans on the other side of the blue screen.

#13 Analyze and Optimize Your Existing Internal Links

Internal links are the forgotten stepbrother of the SEO audit process. They exist, and sometimes they get a mention, but professionals tend to spend their time worrying and talking about backlinks.

This is a shame, because optimizing your internal links alone could improve your traffic by as much as 40%, with a lot less work.

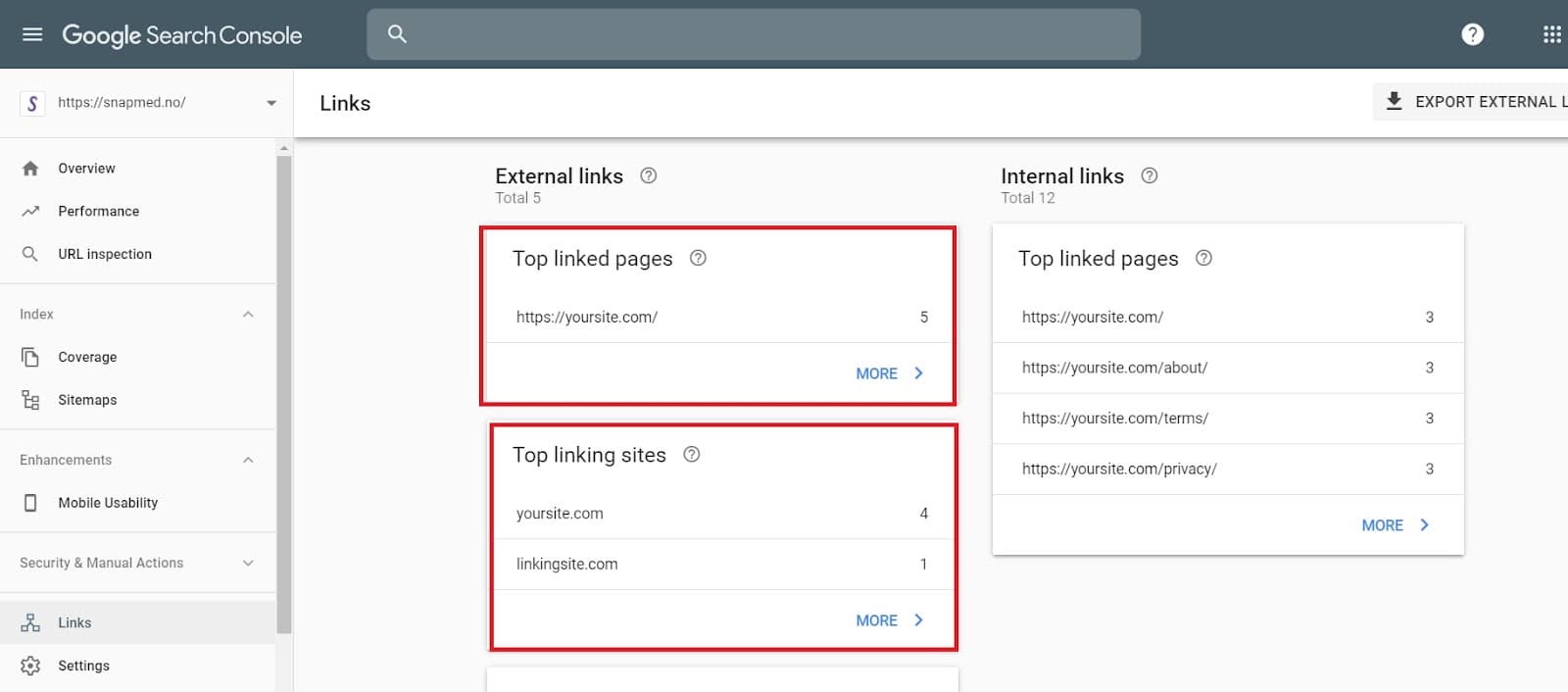

The first step to optimizing your internal links is to understand what links you already have. You can do this with the Links report in Google Search Console.

This view shows a table of internal links on your site, listing all pages in descending order by how many internal links they receive.

Privacy Policies, Terms, and other pages linked to from the footer/sidebar of your website will inevitably rank highly here.

Google is smart enough for that not to be an issue. Focus on the relevant, original content pages.

- Are you giving too many internal links to content targeting low-volume keywords?

- Does your cornerstone content have too few internal links?

These are the questions you want to answer by going through this breakdown. Once you know what your internal links currently look like, it’s time to get to work.
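
If you’d rather work from a crawl export than the Search Console table, you can count inbound internal links yourself. This sketch assumes a hypothetical list of `(source, target)` internal links. It counts each linking page once, ignores self-links, and lets you exclude footer pages like your privacy policy. The `minimum` threshold for “too few” links is an arbitrary assumption; set it for your own site.

```python
from collections import Counter


def inbound_link_counts(links, exclude=()):
    """Count unique linking pages per target URL from (source, target) pairs."""
    unique = {(src, dst) for src, dst in links if src != dst}  # drop self-links and repeats
    return Counter(dst for _, dst in unique if dst not in exclude)


def underlinked(counts, cornerstone_pages, minimum=5):
    """Cornerstone pages with fewer than `minimum` internal links pointing at them."""
    return sorted(page for page in cornerstone_pages if counts[page] < minimum)
```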

The goal is to add relevant internal links throughout your content to connect your pages logically. When done correctly, your site architecture should largely resemble a very flat pyramid.

A site structured this way is an easy hierarchy to understand. Your homepage sits at the top, your high-level category pages sit beneath it, and the individual pages that make up each category sit beneath those.
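
One way to check how flat your pyramid really is: measure click depth, the minimum number of clicks from the homepage to each page, with a breadth-first search over your internal links. This sketch assumes the same kind of hypothetical `(source, target)` link list a crawler can export. Pages missing from the result can’t be reached from the homepage at all (orphan pages).

```python
from collections import deque


def click_depth(links, home="/"):
    """Breadth-first search from the homepage over internal links.

    Returns {url: minimum clicks from home}; unreachable (orphan) pages are absent.
    """
    graph = {}
    for src, dst in links:
        graph.setdefault(src, set()).add(dst)
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for nxt in graph.get(page, ()):
            if nxt not in depth:  # first visit in BFS order is the shortest path
                depth[nxt] = depth[page] + 1
                queue.append(nxt)
    return depth
```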

To give your website this general shape, work on grouping similar content through internal links. Where possible, make sure that the anchor text describes the content it is linking to. So, instead of linking to a page for “Chocolate Chip Cookie Recipes” on the phrase “click here,” you might make the anchor text read “Our complete guide to chocolate chip cookie recipes.”

Also, make sure that each lower-level page links back to your higher-level cornerstone content to give it an SEO boost and make it easier for users to navigate your content. So, all of your cookie recipe pages should link to each other where it’s logical, and back to a general dessert recipe cornerstone piece.

You should also prioritize improving your internal linking by linking to relevant cornerstone pages as you add new content to your site.

There are many tools you can use to help you with this. For instance, if you use WordPress, SEO by Yoast will suggest internal links to include in a post based on topic relevance. But make sure to keep your site architecture in mind and don’t link too much across categories.

Once you’ve nailed every step of this internal link strategy, you will be rewarded with better rankings for your key pages.

#14 Improve Your Backlink Strategy

It’s no secret that backlinks are still the backbone of any large-scale SEO effort. Links from outside sources tell Google that someone else considers your content helpful, and that’s part of a good user experience. Some experts even claim that as much as 75% of SEO is off-page, and backlinks remain one of the top ranking factors.

That doesn't just mean that Google rewards those with the most backlinks. Context matters.

Google cares if the site that links to you is in the same category, authoritative, and linking to you from inside its own content (as opposed to in a link directory).

This means that buying links from link farms is no longer a one-and-done solution to your backlink troubles. You’ll need to build real backlinks from real sites to get actual results. Here are a couple of ways to get that kind of link building done.

Find Opportunities in Your Own Backlink Profile

One of the easiest ways to build backlinks is to talk to people who have already linked to you. Start by returning to the Search Console Links report and focusing on your external links.

If you find any high-quality, relevant domains, you can reach out to see if they’d be open to linking to some of your other content.

Steal Backlinks From Your Competitors

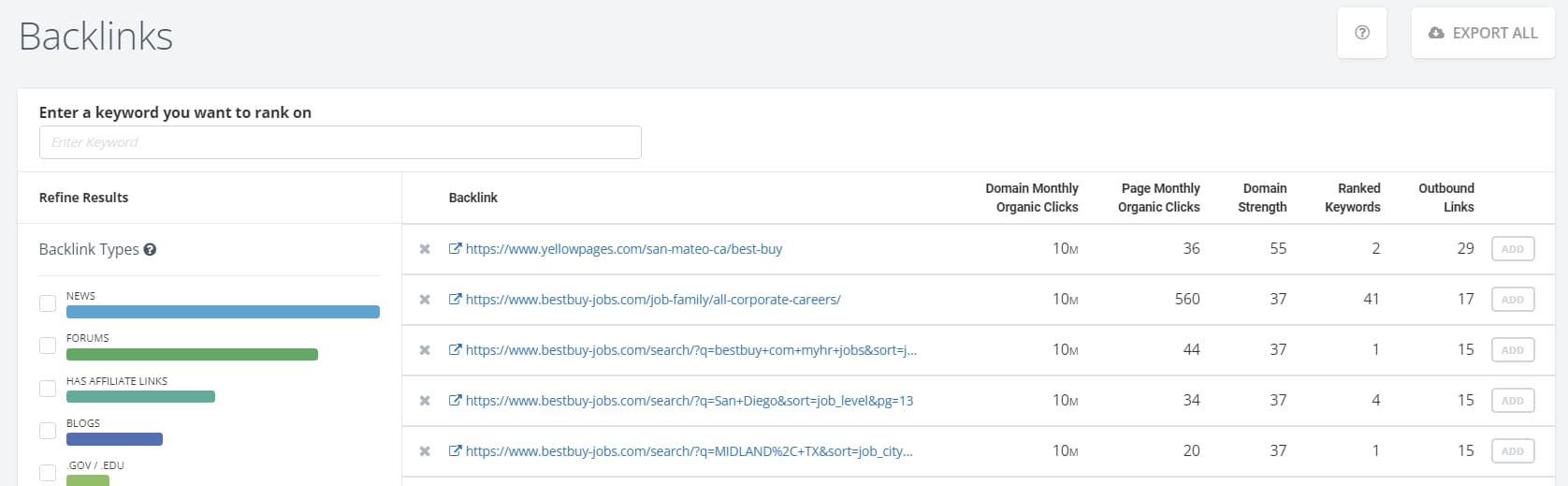

Your competitors’ link profiles can be some of the richest sources of new backlink opportunities available to you today. SpyFu makes finding these kinds of backlinks as easy as pie. Simply head to the Backlinks Tool, type in their URL, and start checking.

If you can find content that has a lot of backlinks but is outdated or simply not that good, you have a potential goldmine on your hands.

All you need to do is one-up the original content and run an outreach campaign to the sites that linked to the original piece. Argue your case, and you just might be able to pick up some backlinks at the expense of your closest competitors.

Stay In Touch With Writers & Influencers in Your Space

This strategy is a bit of a slow burner, but it may pay the most dividends for the time you put into it.

Chances are you already know a few writers and influencers in your space, but you can use a tool like BuzzSumo to find more.

Write down the most important ones, or the ones you think you’ll get along with best, and make a point of keeping in touch with them.

This can mean:

- Letting them know about updates as soon as (or before) they come out.

- Letting them know they can interview your team members if they want to.

- Reaching out to them when your/their favorite team wins the Super Bowl.

Often, you'll have to start that relationship by reaching out to ask for a link. It might seem intimidating, but your best bet is to keep it authentic and concise.

Just make sure you are top of mind when they want to write something about a company in your space. If you nurture these kinds of relationships, more often than not, you’ll start to build backlinks organically through these connections.

#15 Reformatting URLs and 301 Redirects

While it is beneficial to include your keyword phrase in URLs, changing existing URLs can hurt traffic even when you set up a 301 redirect. For this reason, we typically recommend optimizing URLs only when the current ones are really bad, or when the change doesn’t affect URLs that already have external links pointing to them.

So, as part of your SEO audit, you should evaluate your current URLs and make sure that all necessary redirects are in place and used properly.

When reviewing URLs and redirects:

- Use static URLs that are easy for humans to read (no excessive parameters or session IDs).

- Keep URLs short (115 characters or shorter).

- Add keywords where they fit.

- Confirm 301s are being used for all redirects.

- Ensure every redirect points directly at the final URL (no redirect chains).

In some cases, good content will be saddled with bad URLs. Unfortunately, changing those URLs to more optimized versions can hurt more than it helps. For this reason, make sure every new URL you create follows the best practices outlined above (short, descriptive, and optimized with keywords).
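To make the URL and redirect checks above more concrete, here is a minimal Python sketch that tests a single URL against the length and parameter rules and follows a redirect map to flag non-301 hops, chains, and loops. It assumes you have already exported your redirects into a dictionary of the form source URL to (status code, target URL). The function names, the list of session-ID parameter names, and the threshold of two query parameters for "excessive" are hypothetical choices for illustration, not features of any particular SEO tool.

```python
from urllib.parse import urlparse, parse_qs

MAX_URL_LENGTH = 115  # length limit from the checklist
# Hypothetical: query parameter names treated as session IDs.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}
# Hypothetical threshold for "excessive" query parameters.
MAX_QUERY_PARAMS = 2


def check_url(url: str) -> list[str]:
    """Return the checklist problems found in a single URL."""
    problems = []
    if len(url) > MAX_URL_LENGTH:
        problems.append(f"too long ({len(url)} > {MAX_URL_LENGTH} chars)")
    # Count distinct query parameter names, including blank ones.
    params = parse_qs(urlparse(url).query, keep_blank_values=True)
    if any(name.lower() in SESSION_PARAMS for name in params):
        problems.append("contains a session ID parameter")
    if len(params) > MAX_QUERY_PARAMS:
        problems.append(f"too many query parameters ({len(params)})")
    return problems


def check_redirect(start: str, redirects: dict[str, tuple[int, str]]) -> list[str]:
    """Follow a redirect map from `start`; flag non-301 hops, chains, loops.

    `redirects` maps a source URL to (HTTP status code, target URL).
    """
    problems = []
    hops = []
    seen = {start}
    url = start
    while url in redirects:
        status, target = redirects[url]
        if status != 301:
            problems.append(f"{url} uses {status}, not 301")
        hops.append(target)
        if target in seen:
            problems.append(f"redirect loop at {target}")
            return problems
        seen.add(target)
        url = target
    # More than one hop means the first URL should point straight at the last.
    if len(hops) > 1:
        problems.append(f"chain of {len(hops)} hops; point {start} at {url}")
    return problems


if __name__ == "__main__":
    redirects = {
        "https://example.com/old": (302, "https://example.com/interim"),
        "https://example.com/interim": (301, "https://example.com/new"),
    }
    print(check_url("https://example.com/p?id=7&sid=abc&ref=x"))
    print(check_redirect("https://example.com/old", redirects))
```

Working from an exported redirect map rather than making live HTTP requests keeps the sketch offline and repeatable; in practice, a site crawler would typically supply that map.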

#16 Track Your Site Audit Results

If you implement changes during this audit without tracking the results, you’re really just blindly hoping for better SEO. The simple act of installing analytics and tracking results is powerful: it can lead to a 13% improvement in conversion rate all by itself, controlling for every other factor (no increase in budget or change of staff). SEO is no different.

If you didn’t track what happened after you implemented certain changes on your website, it would be like operating with a blindfold. You wouldn’t know what you should keep doing or stop doing.

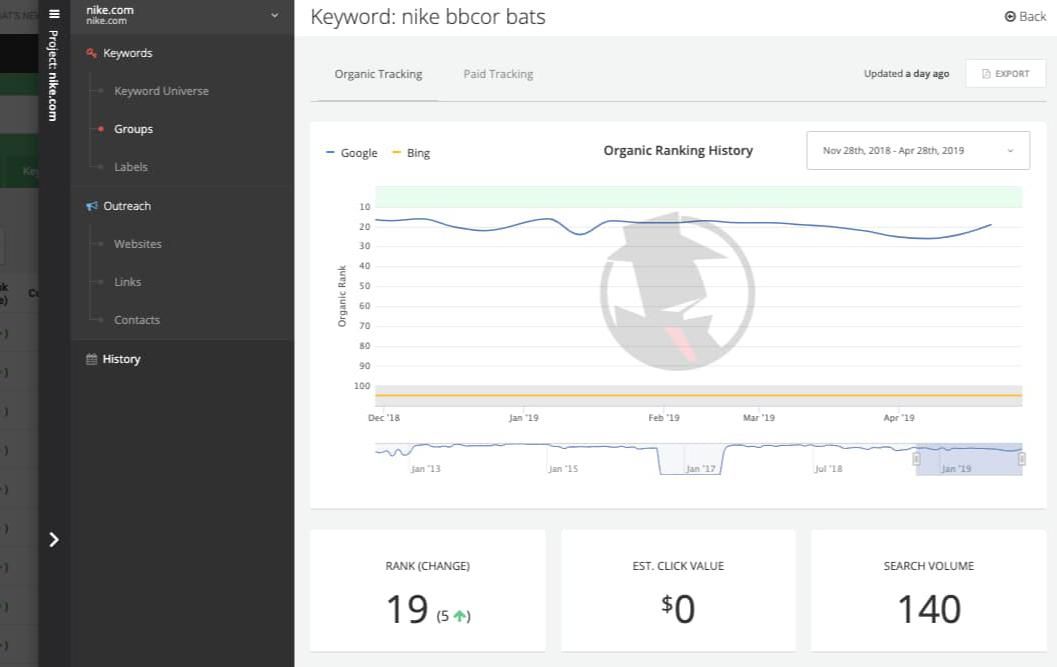

Luckily, SpyFu makes rank tracking easy. Just open the tracking dashboard, and you can see your historical ranks for any keyword.

You can also save keywords while doing other things (like checking out your biggest winners). Saved keywords get ongoing rank updates (and trendlines) whether you check in or not. And if you need to look back, you can check a website’s past rankings to see how they’ve progressed on a keyword.

Stick to the Basics and Run SEO Audits Regularly

It’s wise to run through your SEO audit checklist regularly because it’ll let you stay on top of your SEO efforts and catch errors as soon as they happen.

There are a lot of details you can focus on in SEO: keyword density, perfectly stuffed meta and alt tags, word count, and everything else that sounds good. However, getting stuck on these details can make you lose sight of the bigger picture.

Great SEO is all about consistency and nailing the basics. If you run SEO audits regularly, put good practices in place, track the results, learn from them, and improve gradually, you’ll be in a really good position to earn organic traffic and be light-years ahead of anyone stuck worrying about every “new” ranking factor.